Ads are inevitable in AI, and that's okay

Convergent evolution in LLMs will get us there

We are going to get ads in our AI. It is inevitable. It’s also okay.

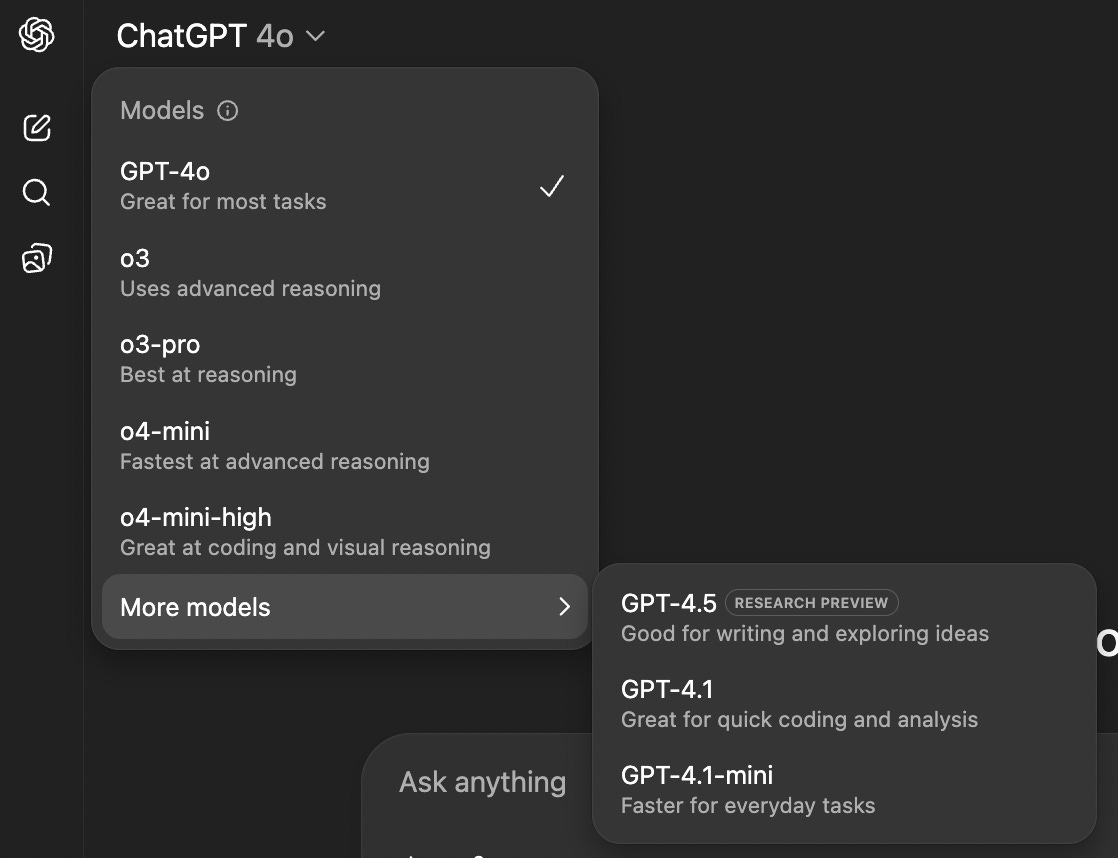

OpenAI, Anthropic and Gemini are in the lead for the AI race. Anything they produce also seems to get copied (and made open source) by Bytedance, Alibaba and Deepseek, not to mention Llama and Mistral. While the leaders have carved out niches (OpenAI is a consumer company with the most popular website, Claude is the developer’s darling and wins the CLI coding assistant category), the models themselves are becoming more interchangeable.

Well, not quite interchangeable yet. Consumer preferences matter. People prefer using one vs the other, but these are nuanced points. Most people are using the default LLMs available to them. For someone who isn’t steeped in the LLM world and watching every move, model selection is confusing and the differences between the models sound like so much gobbledegook.

One solution is to go deeper and create product variations that others don’t, such that people are attracted to your offering. OpenAI is trying with Operator and Codex, though I’m unclear if that’s a net draw, rather than a cross sell for usage.

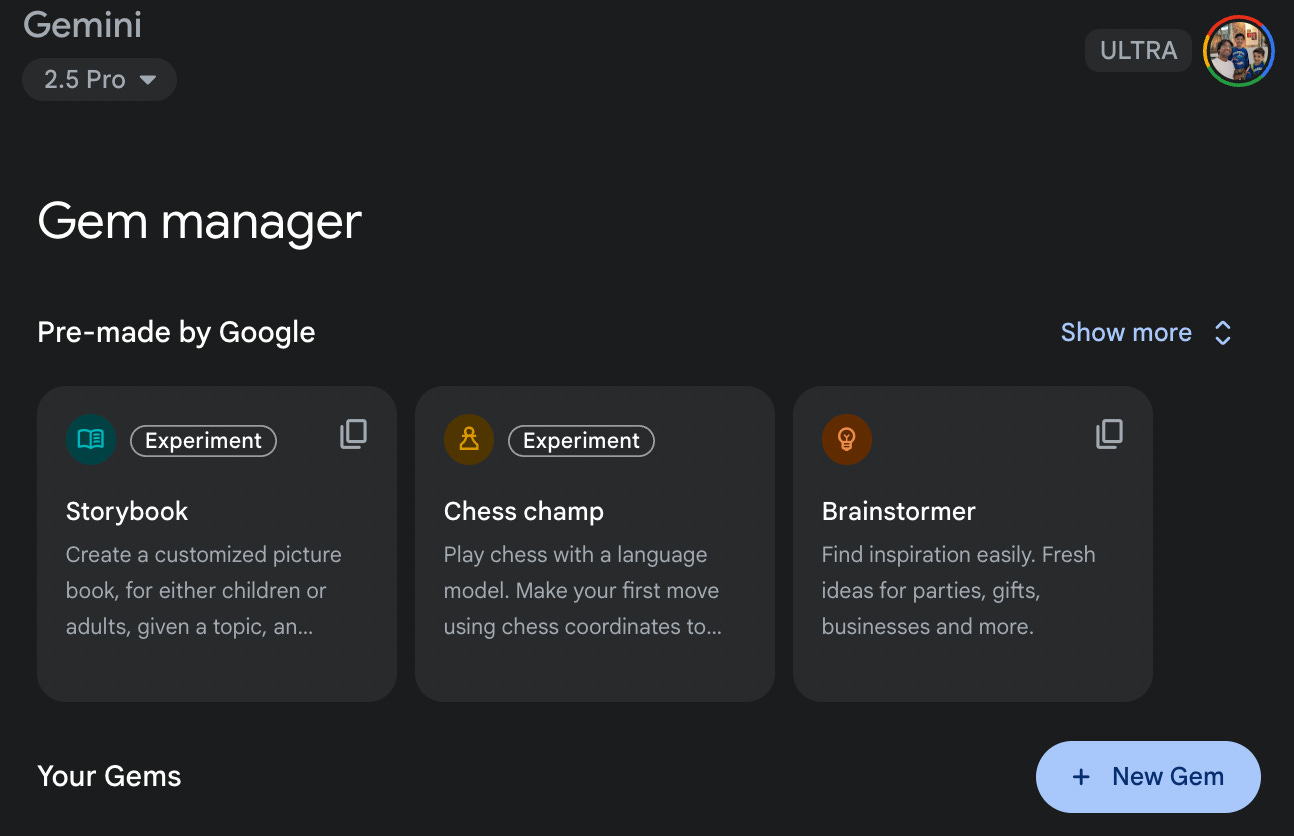

Gemini is also trying, by introducing new little widgets that you might want to use. Storybook in particular is really nice here, and I prefer it to their previous knockout success, which was NotebookLM.

But this is also going to get commoditised, as every large lab and many startups are going to be able to copy it. This isn’t a fundamental difference in the model capabilities after all, it’s a difference in how well you can create an orchestration. That doesn’t seem defensible from a capability point of view, though of course it is from a brand point of view.

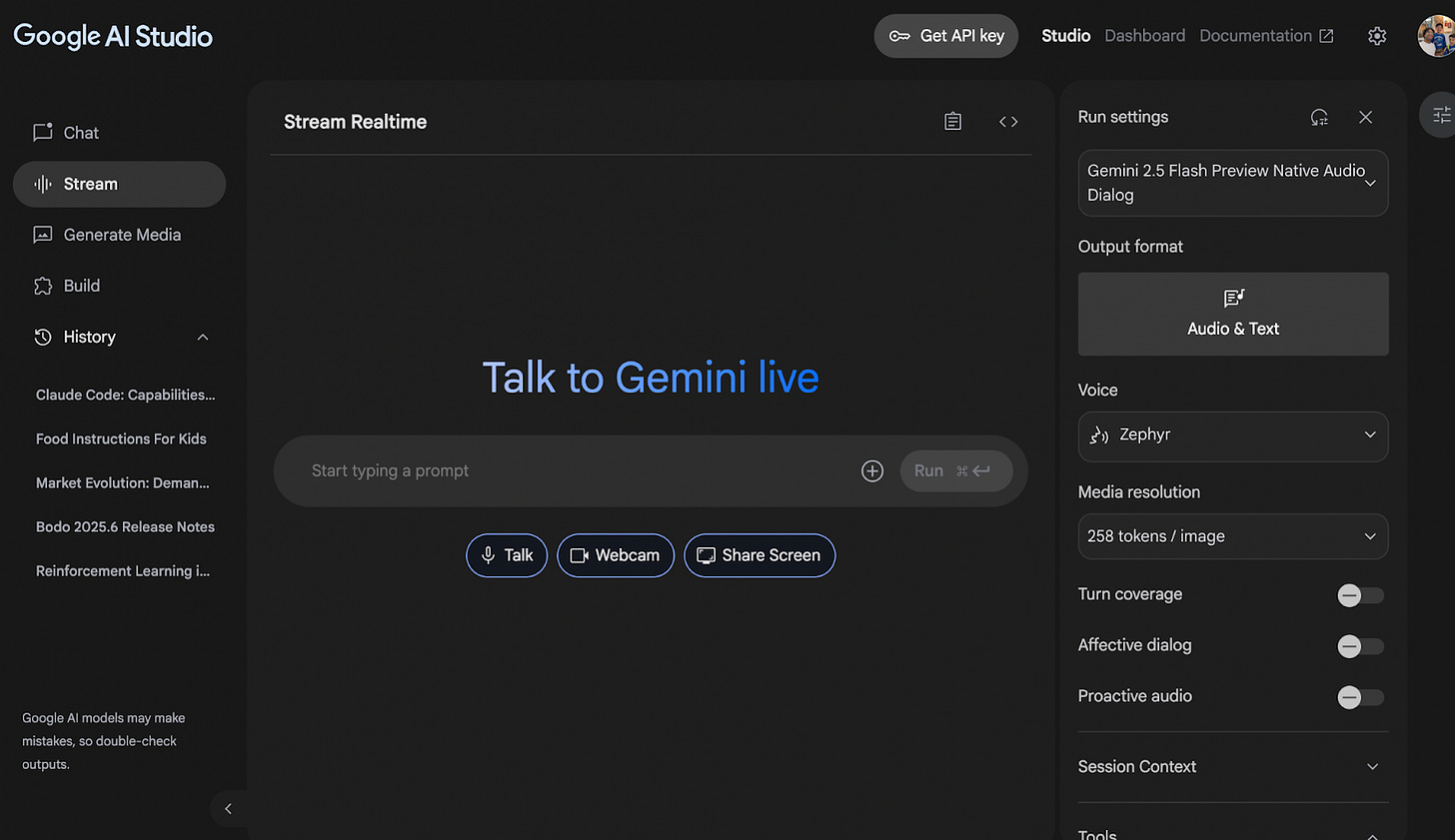

Another option is to introduce new capabilities that will attract users. OpenAI has Agent and Deep Research. Claude has Artifacts, which are fantastic. Gemini is great here too, despite its reputation. It also has Deep Research, but more importantly it has the ability to talk directly to Gemini Live, show yourself on a webcam, and share your screen. It even has Veo 3, which can generate videos with sound today.

I imagine much of this will also get copied by other providers if and when these get successful. Grok already has voice and video that you can show to the outside world. I think ChatGPT also has it but I honestly can’t recall while writing this sentence without looking it up, which is certainly an answer. Once again these are also product design and execution questions about building software around the models, and that seems less defensible than even the model building in the first place.

Now, if the orchestration layers will compete as SaaS companies did over consumer attraction and design and UX and ease and so on, the main action remains the models themselves. We briefly mentioned they’re running neck and neck in terms of the functionality. I didn’t mention Grok, who have billions and have good models too, or Meta who have many more billions and are investing it with the explicit aim of creating superintelligence.

Here the situation is more complicated. The models are decreasing in price extremely rapidly. They’ve fallen by anywhere from 95 to 99% or more over the last couple of years. This hasn’t hit the revenues of the larger providers because they’re releasing new models rapidly at higher-ish prices and are also seeing extraordinary growth in usage.

This, along with the fact that we’re getting Deepseek R1 and Kimi-K2 and Qwen3 type open source models, indicates that model training by itself is unlikely to provide a sufficiently large enduring advantage. Unless the barrier simply is investment (which is possible).

What could happen is that the training gets expensive enough that these half dozen (or a dozen) providers decide enough is enough and say we are not going to give these models out for free anymore.

So the rise in usage will continue but if you’re losing a bit of money on models you can’t make it up in volume. So it’ll tend down, at least until some equilibrium.

Now, by itself this is fine. Because instead of it being a SaaS-like high margin business making tens of billions of dollars it’ll be an Amazon-like low margin business making hundreds of billions of dollars and growing fast. A Costco for intelligence.

But this isn’t enough for owning the lightcone. Not if you want to be a trillion dollar company. So there have to be better options. They could try to build new niches and succeed, like a personal device, or a car, or computers, all hardware-like devices which can get you higher margins if the software itself is being competed away. Even cars! Definitely huge and definitely being worked on.

And they’re already working on that. This will have uncertain payoffs, big investments, and strong competition. Whether it will be a truly new thing or just another layer built on top of existing models remains to be seen.

There’s another option, which is to bring the best business model we have ever invented into the AI world. That is advertising.

It solves the problem of differential pricing, which is the hardest problem for all technologies but especially for AI, which will see a few providers who are all fighting it out to be the cheapest in order to get the most market share while they’re trying to get more people to use it. And AI has a unique challenge in that it is a strict catalyst for anything you might want to do!

For instance, imagine Elon Musk is using Claude to have a conversation, the answer to which might well be worth trillions of dollars to his new company. If he only paid you $20 for the monthly subscription, or even $200, that would be grossly underpaying you for the privilege of providing him with the conversation. It’s presumably worth 100 or 1000x that price.

Or if you're using it to just randomly create stories for your kids, or to learn languages, or if you're using it to write an investment memo, those are widely varying activities in terms of economic value, and surely shouldn't be priced the same. But how do you get one person to pay $20k per month and another to pay $0.20? The only way we know how to do this is via ads.

And if you do it, it helps in another way - it lets you open up even your best models, even if rate limited, to a much wider group of people. Subscription businesses are a flat edge that only captures part of the pyramid.

We can even calculate its economic inevitability. Ads have an industry mean CPC (cost per click) of $0.63. Display ads have click through rates of 0.46%. If tokens cost $20/1m for completion, and average turns have 150 counted messages, with 400 tokens each, that means we have to make $1.9 or thereabouts in CPC to break even per API cost. Now, the API cost isn’t the cost to OpenAI, but it means for the same margins or better they’d have to triple the CPC.
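Here is a rough sketch of that arithmetic in Python. The numbers are the ones quoted above; the one thing I'm assuming (it isn't stated) is that each of the 150 messages carries exactly one ad impression. Under that assumption the break-even CPC comes out a little under the $1.9 figure, which is close enough to say "roughly triple" either way. The function and variable names are just illustrative.

```python
# Back-of-envelope break-even CPC for ads in a chat conversation.
# ASSUMPTION (not from the text): one ad impression per message.

def conversation_cost(messages, tokens_per_message, price_per_million_tokens):
    """API cost in dollars for one conversation."""
    total_tokens = messages * tokens_per_message
    return total_tokens * price_per_million_tokens / 1_000_000

def expected_clicks(impressions, click_through_rate):
    """Expected number of ad clicks for a given number of impressions."""
    return impressions * click_through_rate

MESSAGES = 150              # messages per conversation (from the text)
TOKENS_PER_MESSAGE = 400    # tokens per message (from the text)
PRICE_PER_MILLION = 20.0    # dollars per 1M completion tokens (from the text)
CTR = 0.0046                # display ad click-through rate, 0.46%
MEAN_CPC = 0.63             # industry mean cost per click, in dollars

cost = conversation_cost(MESSAGES, TOKENS_PER_MESSAGE, PRICE_PER_MILLION)
clicks = expected_clicks(MESSAGES, CTR)  # one impression per message (assumed)
needed_cpc = cost / clicks               # CPC at which ad revenue covers API cost
multiple = needed_cpc / MEAN_CPC         # how far above today's mean CPC that is

print(f"API cost per conversation: ${cost:.2f}")
print(f"Expected clicks per conversation: {clicks:.2f}")
print(f"Break-even CPC: ${needed_cpc:.2f} ({multiple:.1f}x the mean CPC)")
```

Change the impressions-per-message assumption, the token price, or the click-through rate and you can see how quickly the gap closes.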

Is it feasible for the token costs to fall by another 75%? Or for the ads via chat to have higher conversion than a Google display ad, not just for product sales, but even for news recommendations or service links? Both seem plausible. Long‑term cost curves (Hopper to Blackwell, speculative decoding) suggest another 3× drop in cash cost per token by 2027.

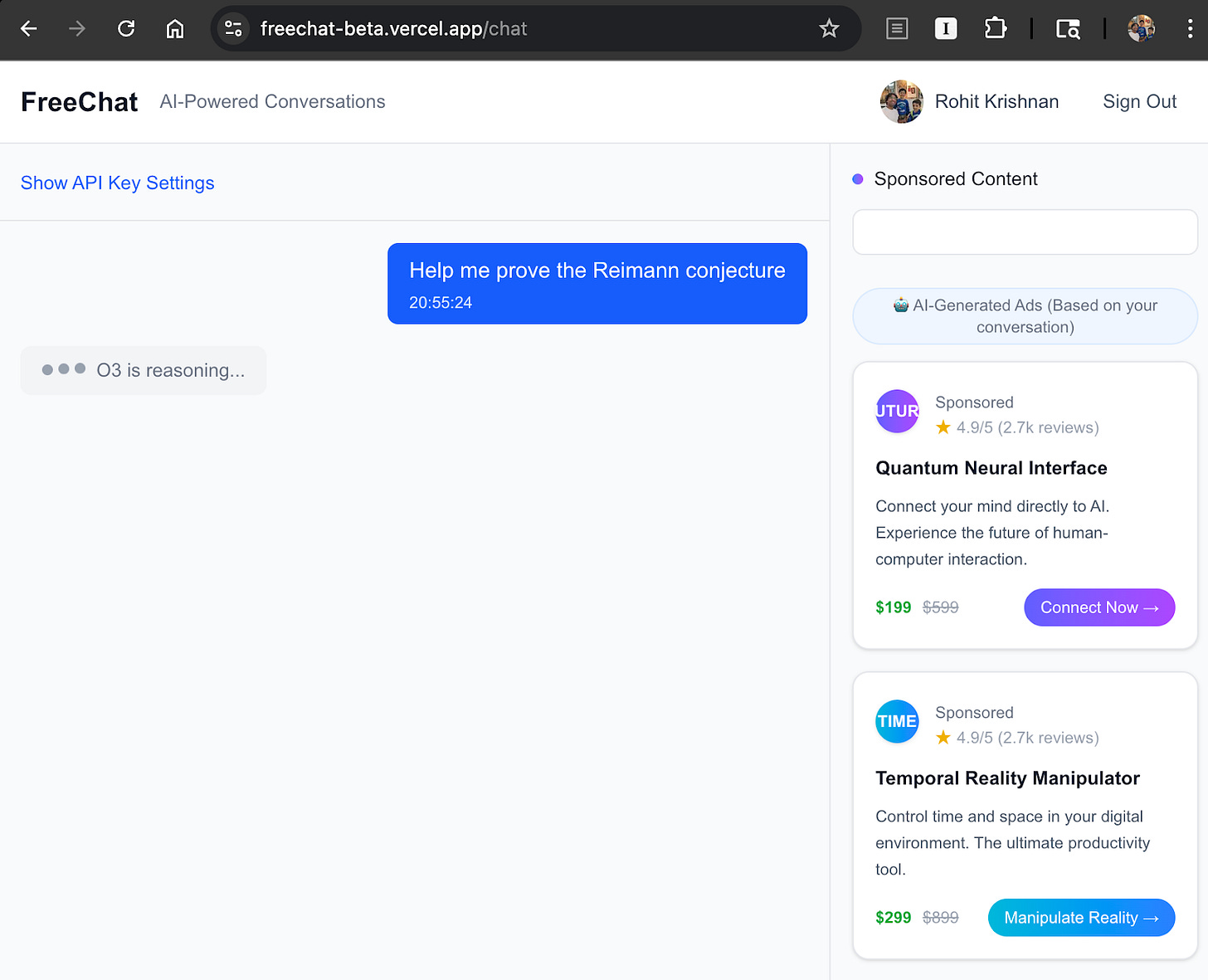

And what would it look like? Here’s an example. The ads themselves are AI generated (4.1 mini) but you can see how it could get so much more intricate! It could:

- Have better recommendations
- Contain expositions from products or services or even content engines
- Direct purchase links to products or links to services
- Upsell own products
- Have a second simultaneous chat about the existing chat

A large part of purchasing already happens via ChatGPT or at least starts on there. And even if you’re not directly purchasing pots or cars or houses or travel there’s books and blogs and even Instagram style impulse purchases one might make. The conversion rates are likely to be much (much!) higher than even social media, since this is content, and it’s happening in an extremely targeted fashion. Plus, since conversations have a lag from AI inference anyway, you can have other AIs helping figure out which ads make sense and it won’t even be tiresome (see above!).

I predict this will work best for OpenAI and Gemini. They have the customer mindshare. And an interface where you can see it, unlike Claude via its CLI. Will Grok be able to do it? Maybe, they already have an ad business via X (formerly Twitter). Will it matter? Unlikely.

And since we'll be using AI agents to do increasingly large chunks of work we will even see an ad industry built and focused on them. Ads made by AI to entice other AIs to use them.

Put all these together and I feel ads are inevitable. I also think this is a good thing. I know this pits me against much of the prevailing wisdom, which thinks of ads as a sloptimised hyper evil that will lead us all into temptation and beyond. But honestly, whether it’s ads or not, every company wants you to use their product as much as possible. That’s what they’re selling! I don’t particularly think of Slack optimising the sound of its pings or games A/B testing the right upskill level for a newbie as immune to the pull of optimisation because they don’t have ads.

Now, a caveat. If the model providers start being able to change the model output according to the discussion, that would be bad. But I honestly don't think this is feasible. We're still in the realm where we can't tell the model to not be sycophantic successfully for long enough periods of time. People are legitimately worried, whether with cause or not, about the risk of LLMs causing psychosis in the vulnerable.

So if we somehow created the ability to perfectly target the output of a model to make it such that we can produce tailored outputs that would a) not corrupt the output quality much (because that’ll kill the golden goose), and b) guide people towards the products and services they might want to advertise, that would constitute a breakthrough in LLM steerability!

Instead what’s more likely is that the models will try to remain ones people would love to use for everything, both helpful and likeable. And unlike serving tokens at cost, this is one where economies of scale can really help cement an advantage and build an enduring moat. The future, whether we want it or not, is going to be like the past, which means there’s no escaping ads.

Being the first name someone recommends for something has enduring consumer value, even if a close substitute exists. It’s also the reason most LLM discourse revolves around 4o, the default model, even though the much more capable o3 model exists right in the drop down.

Also, Claude going enterprise and ChatGPT going consumer wasn’t something I’d have predicted a year and a half ago.

I imagine you are right. One thing to note is that LLM use is largely a personal phenomenon, such that I can run a local model and get value even without internet. This is not the same as social media because there you have to use the same thing as everyone else, because that is what it is.

So as someone with an allergy to ads, I plan to use Claude/ChatGPT etc until they introduce ads, at which point I will buy a GPU and run the best local model via some vibe coded app. The hope is that local models are good enough by then, which will be true very soon.

This will even be possible for nontechnical people since open source apps will and do exist.

So I don't know how much of an effect this will have in the big picture, but it very well could be significant, and it's hard to say since it was not possible before with social media.

This is super interesting.