Symposium: It's hard to bet on human extinction

Human extinction, writing novels, falling inflation, making AI understand our instructions, and annals in dream engineering

Please treat this as an open thread! Do share whatever you’ve found interesting lately or been thinking about, or ask any questions you might have.

1/ The Extinction Tournament, by Astral Codex Ten

Scott has a really interesting post about the outcomes of a tournament held to forecast existential risks. It’s interesting because he has a p(doom) of 33% (i.e., he thinks there’s a 33% chance that we all die because of bad AI). However, the best superforecasters in the world and domain experts came together, thought very hard, and decided it was more like 1-6%, with a wide spread.

Now, the numbers are necessarily only indicative, since nobody has a causal model of why we should expect this to be higher.

Scott is fair-minded in his analyses and lays out his thought process, which is what makes this interesting. In his words:

This study could have been deliberately designed to make me sweat. It was a combination of well-incentivized experts with no ulterior motives plus masters of statistics/rationality/predictions. All of my usual arguments have been stripped away. I think there's a 33% chance of AI extinction, but this tournament estimated 0.3 - 5%. Should I be forced to update?

He concludes yes, a little bit, and comes down to 20%. Now, I don’t fully understand what difference that actually makes unless you were going to get Scott to take a bet, since any practical considerations you had don’t really change on the basis of this. I mean, you could argue for safety, but slightly less vehemently I suppose, though I don’t think he would know how to do that, or that others would understand that’s what he was trying to do.

“I’m being a little less vehement about what I want you to do” he would say, in his most reasonable voice, while people stare at him in wonder and pick between arbitrary binary choices they found in a nearby blogpost.
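To put a number on "a little bit", here’s a minimal sketch of the arithmetic. It assumes a simple linear blend of Scott’s original 33% with the tournament’s estimate; that blending rule is my assumption for illustration, not something Scott says he used, and the function name is made up. It asks how much weight he must have kept on his own view to land at 20%.

```python
def weight_on_own_view(prior: float, outside: float, posterior: float) -> float:
    """Solve posterior = w * prior + (1 - w) * outside for w.

    Assumes a linear blend of the two estimates (an illustrative
    assumption, not a method stated in the original post).
    """
    if prior == outside:
        raise ValueError("prior and outside view are identical; weight is undefined")
    return (posterior - outside) / (prior - outside)


PRIOR = 0.33                      # Scott's original p(doom)
POSTERIOR = 0.20                  # where he ended up
TOURNAMENT_RANGE = (0.003, 0.05)  # the 0.3 - 5% range from the quote

for outside in TOURNAMENT_RANGE:
    w = weight_on_own_view(PRIOR, outside, POSTERIOR)
    print(f"outside view {outside:.1%}: weight kept on own view = {w:.2f}")
```

Under that assumption the weight comes out between roughly 0.54 and 0.60, so he’s still trusting himself a bit more than the tournament.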

But in any case, you should know that superforecasters think there’s a 1% chance of human extinction in the next century. So, you know, do with that data what you will. And in case you need more info, here’s a little bit more about extinction.

2/ Can novels make us better people? gets added to the annals of rhetorical questions

The Washington Post wants to know, or at least a review of Joseph Epstein’s book by Jacob Brogan wants to know. Continuing the proud tradition that all rhetorical questions can be answered with a no, or a shrug of the shoulders, this one too matches the beat.

But it made me think, how often is it that we keep coming across the same line of thought? Somewhere inside us we’re seemingly very uncomfortable with the idea that a thing exists because it is good and fun for us to have it exist. It needs to be given a moral weight, and consequently given a higher purpose.

Having posed the question, to which the answer is surely "yes, some novels can", the article goes on to answer a different question, which is whether they should, using Epstein’s various annoyances at modern writing. These are legion, and include sex scenes, which he seems to find pretty bad, even though presumably the human race kind of sort of depends on them. In almost every way it’s a box trying to fit the whole of our existence inside it, trying to slice away whatever doesn’t fit.

For the eminent literary critic, there are few accomplishments more meaningful than producing a full-fledged theory of the novel. These are works that aspire to tell us how we read and why, to explain what literature offers and how it does it. Such projects tend toward the magisterial even when they are brief, and their authors — Georg Lukacs, Mikhail Bakhtin and others — lend their names to whole subfields of academic inquiry and argument.

Everyone wants to feel superior, and might as well use novels to do it as much as anything else! Objective standards might exist, but relying on others to define the objective standard only seems to end up in a self-referential morass. A novel exists because the writer wanted to bring something new to the world. Readers might even like it, which is a bonus. To make this act of creation into a question of one person’s aesthetic standard is the biggest sin.

3/ Mortgage rates fall in the UK

This isn’t a personal finance blog, but still, when the UK turns out to show a lower rate of inflation than expected, that’s a big move! Banks have substantially revised their expectations of non-performing assets (people not being able to keep up as their monthly mortgage payments rise). Which means this is good news for a lot of people.

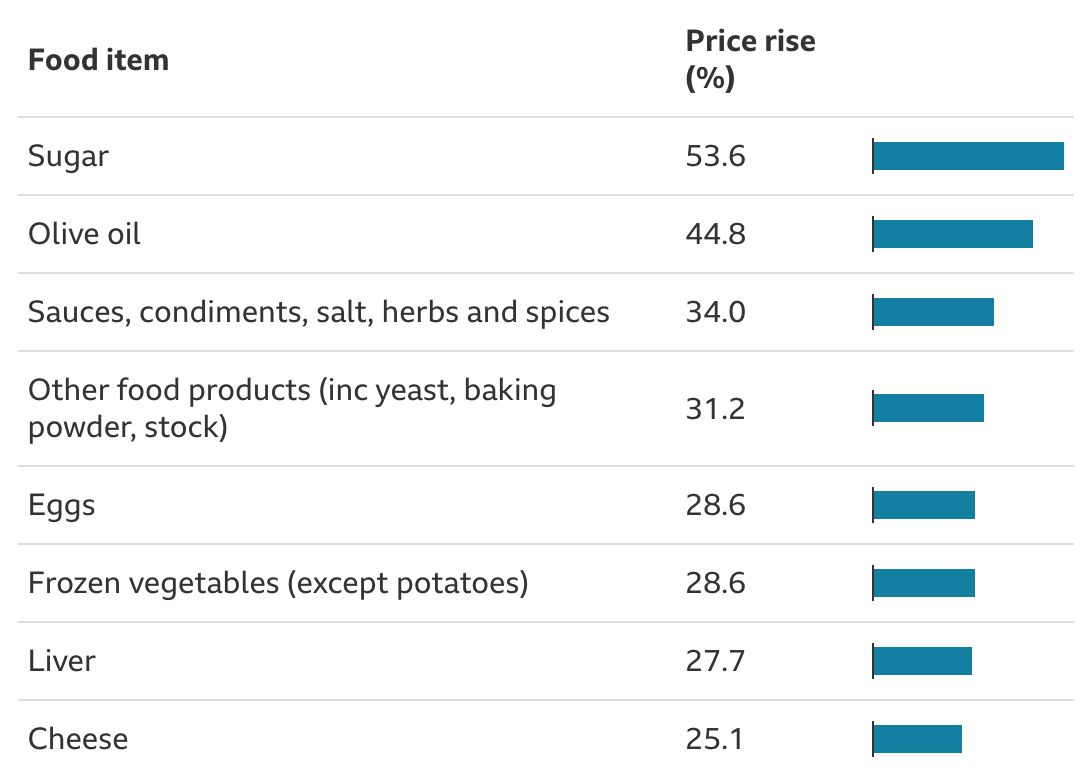

But the fact is that even with unemployment not particularly high, the UK’s had a hard time as pay hasn’t kept up with inflation. In fact because of it.

4/ Sigh, more scientific research problems that are similar enough to, but maybe not directly, fraud

Stanford President Marc Tessier-Lavigne will resign effective Aug. 31, according to communications released by the University Wednesday morning. He will also retract or issue lengthy corrections to five widely cited papers for which he was principal author after a Stanford-sponsored investigation found “manipulation of research data.”

I do find it pretty incredible that this continues to be shown as true again and again and again. While I don’t really want to throw the baby out with the bathwater, the idea that the scientific establishment is so easily fooled by actual false data is troubling.

Now, I don’t particularly want to cast aspersions, but this seems bad.

The investigation took eight months, with one member stepping off after The Daily revealed that he maintained an $18 million investment in a biotech company Tessier-Lavigne cofounded. Reporting by The Daily this week shows that some witnesses to an alleged incident of fraud during Tessier-Lavigne’s time at the biotechnology company Genentech refused to cooperate because investigators would not guarantee them anonymity, even though they were bound by nondisclosure agreements.

One way out of this is to focus on better testing and monitoring, but this feels intrusive and adds more bureaucracy. Another’s to make everyone into gentlemen and gentlewomen, but that feels unlikely to happen as long as cheating brings such outrageous rewards.

Along with a large number of weasel-words and confusion and retractions and people being scared to speak up and manipulation, I’d add this to the list of why people don’t treat academia with all that much respect any longer.

5/ OpenAI creates custom instructions

A big problem with ChatGPT was that you couldn’t personalise it all that much. Now there are function calls that can be specified in order to get properly formatted results; Microsoft’s TypeChat, which uses type definitions in your codebase to retrieve structured AI responses that are type-safe; and custom instructions to help make ChatGPT respond to you appropriately.

It’s more or less in line with the advances that are needed to make AI work the way we need it to, as discussed here.

This matters because us being able to use LLMs in more predictable ways is the secret to them going from interesting but unreliable genius curios to actual genius workhorses we need them to be! Add more colour from fully configurable models like Llama 2 from Meta and we are beginning to see the emergence of a whole new type of software stack!
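To make "predictable" a bit more concrete, here’s a minimal sketch of the underlying idea: you declare the shape you expect, and anything the model returns that doesn’t fit gets rejected before the rest of your program sees it. This is not OpenAI’s function calling API or TypeChat itself. The `CoffeeOrder` schema, the `parse_order` helper and the example replies are all hypothetical, and the model’s reply is just a hard-coded string here.

```python
import json
from dataclasses import dataclass


@dataclass
class CoffeeOrder:
    """Hypothetical schema we want the model's reply to conform to."""
    drink: str
    size: str
    quantity: int


ALLOWED_SIZES = {"small", "medium", "large"}
EXPECTED_FIELDS = {"drink": str, "size": str, "quantity": int}


def parse_order(raw_reply: str) -> CoffeeOrder:
    """Parse a model reply as JSON and validate it against CoffeeOrder.

    Raises ValueError if the reply is not valid JSON or has the wrong shape.
    """
    data = json.loads(raw_reply)  # JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    if data.keys() != EXPECTED_FIELDS.keys():
        raise ValueError(f"expected fields {sorted(EXPECTED_FIELDS)}, got {sorted(data)}")
    for name, expected_type in EXPECTED_FIELDS.items():
        value = data[name]
        # bool is a subclass of int in Python, so exclude it explicitly
        if isinstance(value, bool) or not isinstance(value, expected_type):
            raise ValueError(f"field {name!r} must be {expected_type.__name__}")
    if data["size"] not in ALLOWED_SIZES:
        raise ValueError(f"size must be one of {sorted(ALLOWED_SIZES)}")
    if data["quantity"] < 1:
        raise ValueError("quantity must be at least 1")
    return CoffeeOrder(**data)


# A well-formed (made-up) reply parses into a typed object...
order = parse_order('{"drink": "latte", "size": "large", "quantity": 2}')
print(order)

# ...while a creative one is rejected instead of silently passed along.
try:
    parse_order('{"drink": "latte", "size": "bucket", "quantity": 2}')
except ValueError as err:
    print(f"rejected: {err}")
```

In a real setup the rejection message would typically be sent back to the model so it can try again, which is the loop that turns a curio into a workhorse.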

6/ GitHub Copilot Chat is rolling out to more people

So a fun difference this time around is that every single incumbent seems to have been eagerly waiting to take advantage of the disruptions going on around them, and they have moved at lightning speed. They have partnered and developed their own versions of generative AI and integrated them into their tools so fast that it would’ve seemed silly if someone had predicted it.

For instance, Microsoft partnered not just with OpenAI but also with Meta to use Llama 2, and has integrated generative AI into all its products in under a year (or maybe 6 months). This isn’t the old IBM type stasis. These aren’t dinosaurs either. They’re moving into areas so fast that they’re literally destroying startups left and right!

I can’t remember another time this happened. This is more common in the financial services sector, where new investors and structurers come up with clever products which get copied by Goldman as soon as it realises they can make money. To see it happen in tech is new. And pretty awesome!

7/ Prompt engineering for humans

Imagine being a human who is unable to control your “autocompletions”. Like you end up having dreams that somehow complete into nightmares.

And it turns out you can prompt engineer your way to having better dreams! You can rescript them through a variant of CBT (cognitive behavioural therapy) and try to rewrite the dreams or imagine new endings, and your brain goes “oh, that’s how I’m meant to complete this sequence” and the chaos becomes serene again.

Mostly though I found this stat scary.

Two to 6 percent of people have nightmares at least weekly.

But if you do, you can talk your way through it, which is good news. And dream engineer is a fantastic term, and I should probably adopt that too!

Hi Rohit. I published an essay this week talking about the virtues of public libraries, hopefully this is something everyone can agree is a good idea: https://howaboutthis.substack.com/p/public-libraries-awesome-things

0.3 percent and 20 percent are both unacceptably high risk thresholds when we are discussing human extinction.

I suppose it's not all bad though, as I've noted here with regard to entropy: if all we are is a collection of self-aware atoms, the universe discovering itself and resisting its own entropy, then perhaps AI is better suited to fulfil this goal than we are.