A taxonomy of disinformation

Strategies employed to create and spread disinformation - an overview

I

How does disinformation actually spread? I've looked before at the ways persuasion plays out these days, but disinformation has intriguing enough characteristics of its own to deserve separate treatment.

Sometimes I think about this question in one of two modes. The first is to dismiss it by assuming that those affected simply aren't putting in enough cognitive effort. Then I remember that there are any number of disciplines where I can't put in that effort myself, and where I rely on others, on experts and on general consensus. So I swing to the other extreme and think of disinformation as a hack on the human brain that's impossible to overcome.

As an aside, the word disinformation is said to derive from the Russian dezinformatsiya, which appeared in a KGB document, and it gained international recognition in the 1980s. What sets it apart from misinformation is that it is the wilful dissemination of false information. Intent is what matters here, not just distribution.

While we debate what constitutes wilful falsehood and how to determine intent, the question remains: how should we actually analyse this phenomenon? Currently we mostly treat lies as if they were created and spread in a relatively homogeneous way. But they're not. Just as companies use varied strategies to grow, all disinformation efforts are strategies, but they differ in style.

Taxonomies help categorise information so we can communicate more easily with each other. And there are certainly plenty of articles about how disinformation is a problem, about how we should combat it on social media, and about the mechanisms by which disinformation diffuses through society once its agents unleash it.

One example of what this might look like is the framework by Hossein Derakhshan, which tries to classify the types of disinformation by their intended target and the agent perpetrating them.

Now that we know that, does it help us see how disinformation campaigns play out? We still need to know a bit more about the actual actions taken.

For one thing, it's interesting to see the tactics everyone applies. For another, I looked in vain for a comprehensive list of disinformation strategies; despite the common wisdom that we're living in the "fake news" era, one is hard to find. It would be useful to have a way to assess what disinformation might look like without relying on post-mortems.

II

Since 2020 (and some of the preceding years) was an absolute masterclass in creating disinformation, I ended up noting a few of the types we saw and building a taxonomy from them. As a natural experiment, 2020 has been fascinating, and below are a few of the best battle-tested disinformation strategies: something a bit more actionable. This is one framework I find useful for thinking about the issue.

At the core is a strategy of completely deplatforming the opposition by creating a narrative that any effort to change your mind is itself suspect.

The arrangement increases in efficacy and comprehensiveness as you go down the pyramid. The first two are ideal end points: they change the entire narrative, or the information that people receive. Both depend on finding and cultivating a large base. Interestingly, what most of the tactics have in common is that they aim to take control of the information processing that happens within the listener.

At the bottom level you're effectively talking with people to try to disrupt the least susceptible. Here you need to actively influence their information-processing priors.

The second level supplies analysis that tells listeners how they should interpret the information presented to them. This is useful for pushing "us vs them" thinking further.

The third level actively interferes with which information gets attention in the first place. It's designed to create chaos and focus people's attention on the "show", so that every story either becomes about the process itself or gets one or two news cycles at most. This ensures that the primed priors and analytic techniques have only intellectual vapour to work on.

And the top level is the highest calling: creating a conspiracy. It's not easy to start from this spot, but it's the end goal. Peter Thiel likes to say that all great startups have a secret. So do all great conspiracies. Like a great video game, they lead you through a series of questions, assertions and slow engagements until, by the end, you're fully bought in.

What the strategies have in common is the need to activate a certain group of people emotionally in order to push the narrative deeper. Some methods work better once that group is activated, though going down the pyramid is a great way to create and reinforce the messages.

Seed information and watch it blossom

Otherwise known as the Russian interference strategy, or the "Russia, what Russia, it's all true" strategy.

It's characterised by leaving sneaky anonymous hints all over the internet in the hope that one in a thousand blooms. It requires long-term thinking and a willingness to persevere, so it might need several shallow pockets (note: not one deep pocket; the manpower is pretty cheap).

It's a bit like an open-source version of seeding chaos: it's rarely directed at a constructive end, since it's hard to steer, but as a way to destroy the credibility of a movement it's a remarkably stable strategy.

One key insight is that the things you say will find a foothold somewhere, in some corner of someone's mind. And ideas, once lodged, are remarkably hard to dislodge. This means that as long as you make consistent enough offers, they will eventually reach some critical mass.

The effort-to-reward ratio here is unpredictable, however. You have to spend an enormous amount of time seeding the world with your ideas in the hope that at some point in the future it pays off. Secrecy is ideal, though not necessary, for some types of disinformation but not all. If Russia is planting information, it won't want the provenance known. For a political thought leader, however, their name is an essential part of the message.

Not to be outdone, no less a source than the US Defense Department has made this part of its plans.

This one also peaked a few years ago, so its anonymous form has been temporarily retired in most places. The strategy as a whole is also susceptible to what's known as the "sunlight disinfectant" problem: once people know about it, it can stop working (unless you're extra sneaky).

Examples: Russian disinformation campaigns

Repeated affirmations

A warning: it's really not that easy to lie bald-facedly. There's a reason that even politicians, until fairly recently, tended to scatter enough qualifiers around that their lies were couched in justifications.

Ah, but no more. In an information-based world you can simply assert what you want to be true, vociferously enough and for long enough that everyone except those who actually know what happened starts to feel doubts. Our presumption that people are to be trusted, and that "where there's smoke there's fire", means the sheer volume of noise generated works in your favour.

What this means is that if you repeatedly say something is true, you can sway some number of people towards your belief. Even if they don't travel the entire way, once the doubt is cast, it stays. It's difficult for us to continually hold a position of disbelief towards confidently uttered words from semi-credible sources.

Making that emotional connection with your audience is also key. That's what lets the message penetrate more easily and lets the amplification truly take shape.

Again, this is really, really hard to do. You need to be steadfast in the face of overwhelming evidence if need be. No vacillation allowed. And you have to truly internalise that whole sticks-and-stones thing.

Example: The stolen 2020 US presidential election

Subtle denunciations to play both sides

The hardest part of peddling disinformation is that once in a while someone calls you on it, and you actually have to bow to the pressure. So what do you do? You have to thread a fine needle. Give enough vague hints to all sides. Say you're sorry without actually apologising. Explain why you're right. Explain your rationale. Blame all sides.

Again, it's really hard to pull off a non-apology apology at the best of times. The strategy here is to seem contrite, as if you're playing the game, while doing the bare minimum to pay it lip service.

In most endeavours we're playing by unwritten rules and norms. When we engage in conversation, debate or dialogue, we're abiding by a set of rules we've all agreed to. You'd expect the other person to agree with you when you state something true. You'd think they'd accept logic. You'd think that when they disagree with something they'll say so, and when they agree with something they'll say so. What all of these points have in common is that you have to be willing to change your mind.

Which is why the subtler denunciations are harder to parse. It feels like you're playing the same game as the other person, but at the same time you're not. We can all tell when a boss or a spouse or a friend is paying lip service to what we're saying, agreeing without listening, or believing the opposite despite claiming not to. It speaks to a person's inner beliefs, so you can't even argue against it.

But when it's pulled off, it's a great tool for building a large movement with a core fanbase (who will read whatever they want into your messages), a core-adjacent base (who can legitimately claim ignorance) and a pliable enough opposition (who have to find a way to oppose something ethereal with something tangible).

Example: Scott Adams

Information avalanche

I mean look, it's only sensible. If you wanna do one shady thing while people are watching, it's difficult. That's what keeps a lot of us in line. You do something bad, you get caught, your cheeks turn red.

But there's a superior strategy. Just do a whole lot of things at once. Create an avalanche and completely bury those watching you in pointless work trying to catch up. Nobody can keep up. Any flak you take, if you take any at all, will be minor in both amplitude and duration. Meanwhile you can use the barrage of things you actually are doing (or seem to be doing) to your advantage. At worst it's a way to convert your incompetence points into "hard working but ..." points.

The strategy is to basically throw around accusations at light speed, much faster than they can be denied. The trick here is to engage your base and get them to agitate on your behalf.

It comes from the realisation that we're all human and have limited bandwidth at the best of times. And responding to something takes way more energy and cycles than creating the incident did in the first place.

There are a couple of meta-games that can be played here to control disinformation campaigns:

Selective censorship, selectively removing content that people can see

Selective amplification, amplifying specific content that people can see

What's interesting in today's environment is that both of these also fall under the information avalanche strategy: you focus only on the points you believe further your agenda and sweep the rest under the rug.

The difference now is that the forces pushing back against these tactics don't have much strength if it's all done fast enough. Speed and volume become the enemy of truth.

It's like watching a master piano player. You can't keep track of her hands across all of those keys. At some point you just believe it's all kosher and move on.

Examples: An entire presidency in a land unnamed

Blame everyone else

Covid was a masterclass in victimisation arguments and in blaming others. Of course, as usual, the 45th was playing 3D chess with us: he was extraordinarily explicit in both his strategy and his execution, and yet somehow it worked as a persuasive message for a large enough population. Times like this make me believe we're in a simulation too.

But the strategy makes you strong. It lets you be like a duck, with the water just rolling off your back. When you have an answer for everything (and somehow it's a highly satisfying, simplistic good-versus-evil narrative), you're able to push through most situations.

Similar to the repeated affirmations strategy, it's highly effective when carried through to absurd extremes and held with unshakeable will. There's an insight here too: most things in the real world are complicated enough that you're rarely fully to blame. So you and your direct skeptics start to look alike, blaming each other, while your more fair-minded skeptics are forced to concede some minute portion of a point to you ("he's only 95% to blame, not 100%"), which you can then turn around on them ("look, even they're saying I'm not 100% to blame").

In Wittgensteinian fashion, this is how we reach the end of language games and can make words mean anything we want.

It's actually the most common strategy of all, used by almost everyone from the toddler trying to get out of tidying up the toys to the CEO blaming the market for poor performance. The beauty is that since the world is complex, most arguments made in this vein will actually have some semblance of realism.

The key here is to never lose control of the narrative that you're doing the right thing, and that either your opponents or the world itself is conspiring to make things look worse.

Another approach is to blame everyone else for a while and then, when things do get bad, blame everyone else again, this time for their part in making you 'look bad'. This way things are always someone else's fault, either for doing a bad thing or for preventing you from stopping a bad thing from happening.

The combination of pity and anger is powerful. It's the path to the dark side for a reason.

Examples: Covid response

Selective skepticism

This one needs you to pretend you don't know much. So, you know, not easy for the twitterati. The key is to relentlessly "just question" everything. Scott Alexander calls this "isolated demands for rigour".

"But how do you know that?" is the question to ask here. It can put anyone into a complete epistemic funk, slowly pushing through the façade of their beliefs and digging deeper.

The insight here is that most people's knowledge doesn't go that deep on any topic. Within a few conversations at most, they will realise how much they rely on pieces of information and data, or on second-hand knowledge. Nobody knows everything.

And those pieces are the brittle ones. That's when the nuclear question can be unleashed: "We need to carefully examine the evidence." It sounds innocuous and sensible, something any right-thinking person would do. And since most of the audience won't be able to follow the argument much deeper than "do they seem like they're doing the right thing?", it will be difficult to argue against.

At which point you have won. It is, after all, impossible to prove a negative. And in our world it's impossible to fault someone for wanting to find more data or do more analysis. It ostensibly shows that they're playing the same game, even while they're actually casting doubt on every aspect of it.

Examples: The fight against deficit financing economic growth

Make up a conspiracy

Perfectly demonstrated by the election theft that was prophesied and, lo, came true. This is a tough one to pull off if you have a shred of integrity, but luckily enough, integrity is not in plentiful supply.

There is a large number of key ingredients that go into making a conspiracy, and plenty of theoretical research to draw from. It's also a longer road to take people down before they get the payoff. It helps if they're emotionally primed, because the attunement process that follows is easier. But it's still not simple.

A large part of the discourse around online polarisation revolves around this point. It consists of accusing the other side of making up a story to get people to believe it: of creating facts and fiction and mixing them together until you don't know what is what, until some semblance of a narrative emerges. And narratives are hard to get rid of once they're lodged deep.

A key characteristic of a good conspiracy is that, in classic anti-Popperian fashion, it's almost impossible to disprove from the inside. From the outside you might be able to show charts and tables and evidence, but if you're inside the conspiracy, they're all suspect. Show something from the media? Well, the source is biased. Show something from academic journals? Well, they can be wrong. Bring up historical facts they themselves agree on? Well, the interpretation can be changed. Or the motivation. Or the circumstances. Everything becomes infinitely malleable when you're on the inside.

Another benefit of the strategy is that once it's planted as a seed, it's self-perpetuating. The actions of your followers create and invite reaction, and that reaction then becomes fruit for the next round. For instance, once enough people create enormous chaos on your behalf and repeat the lies you made up, you can take the disbelief they're met with as a further reason to question everyone else's credibility.

It's truly the best meta-strategy here. It has two interesting characteristics. The first is that it's a positive feedback loop that feeds on itself: your theories become the seed of a movement, which you then use as the pretext for even more theories. The second is that it's almost impossible to disprove and can only be dismissed by appealing to incredulity. Whoever said circumstantial evidence isn't enough knew about this loophole, I think, but it sure does seem to seep into the world an awful lot.

Example: QAnon, The election steal

III

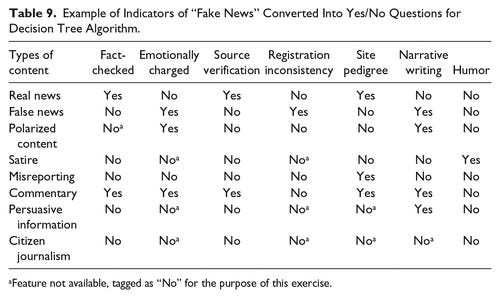

For completeness' sake, there are a few other ways disinformation frameworks have been approached. One is to look at the actual spread of information versus disinformation across media channels. Another is to look at the motives of the perpetrators and the targets of the deception. And yet another is to break disinformation down into its component parts and analyse them atomically.

First, another method, this time used to map the landscape of Covid disinformation, maps the activities that took place to the agent (individual or state) and the agent's motivation (political gain or profit). This is also helpful for analysis in hindsight, but it doesn't actually spell out the strategies used; it lumps the types of disinformation together.

The issue is that while this is a great way to break down the types of disinformation we see in terms of their impact, it doesn't help us look at the intent or the actual strategy. And arguably it's the intent behind the disinformation and the strategy of its deployment that are of paramount interest. We can and should fill out the grid once an event is past, but at any given moment, looking at the cross section of all the communication that's happening, how do you determine what type of disinformation, if any, is taking place?

There's also the issue that even though you could spend substantial time compiling attributes and heuristics that could lead you to better news sources, putting them into practice is next to impossible.

If you do think it can be done, consider the allegations that the 2020 US Presidential Election was stolen. They've been highly persuasive, clearly enough that smart, educated people took them as valid and stormed the US Capitol building. If they were right, this was absolutely the right thing to do. But the election wasn't stolen.

In 1937, the Institute for Propaganda Analysis described seven devices for identifying propaganda, which are still widely applicable. The fact that this was written almost a century ago, before TV was widespread and long before the internet was even a pipe dream, is incredible.

The first here helps us assess motivations in hindsight rather well. That is tremendously useful for assigning a prior credence to an individual or an organisation, and it's highly helpful as descriptive analysis. The second option here *looks like* it could be useful, since it also kind of echoes the process we go through in our own minds, but putting it into practice seems incredibly difficult. As a taxonomy and a decision tree, however, it is wonderful.

And the third is a perfect encapsulation of how arguments and propaganda are still made, nearly a century later. The devices don't, however, all sit at the same level of the hierarchy. For instance, name-calling is easily spotted and can be discarded. But card stacking is a much more comprehensive strategy that encompasses both the information avalanche and subtle denunciations, and might even overlap with repeated affirmations.

All that said, with this storm that's going around, how do people actually end up changing their minds or moving on? A few suggestions.

The "screw it" mode: you decide that what you're mad about isn't worth acting on. You haven't changed your mind. You haven't done a fundamental rational analysis of the events, revised your theory or worldview, or analysed evidence. This is a pure calculus of effort to reward.

The rational calculus. This works at the margins and can occasionally percolate through to the rest. But it's a long road and takes time. Here the effort-to-reward calculus overlaps with analysis of causal links deeper in the chain and with efforts to learn more and educate yourself on all sorts of nuances.

The emotional appeal. This can work on larger groups, but you need to find a hook into their psyche that overlaps with your own empathetic response. It is arguably the mirror image of the persuasion game played to spread disinformation, used to push the opposite message. Its broad applicability has also made it widely used across politics and business, because it still works.

It's really difficult to pin down how minds actually get made up, though understanding the ways in which we are disinformed will hopefully help us stop it when it happens, rather than only recognising it in hindsight.

Almost all of these tactics are only useful in public communication, since you need mass psychology on your side. A substantial proportion of political dialogue happens within levels 3 and 4. Once Trump announced his candidacy, he started using Level 2 to great effect, and it led inexorably to Level 1.

It isn't inevitable that the flow only ever runs down the pyramid, or that we can't find a counterbalance in the other direction. But in life it's useful to know the strategies your opponents use, in case they help you figure out your own. Part of that is naming the problem, which is what I've tried to begin here.

Most of what we think of as simple lying or disinformation is part of a strategy. Having a taxonomy helps us talk about the different types out there without getting too bogged down in individual narratives, or staying so high-level that we lose sight of what we're arguing against.
